ETL or Munge?

Data Munging

Data munging or data wrangling is loosely the process of manually converting or mapping data from one "raw" form into another format that allows for more convenient consumption of the data with the help of semi-automated tools.(https://en.wikipedia.org/wiki/Data_wrangling

ETL

In computing, Extract, Transform and Load (ETL) refers to a process in database usage and especially in data warehousing that:

Extracts data from homogeneous or heterogeneous data sources

Transforms the data for storing it in the proper format or structure for the purposes of querying and analysis

Loads it into the final target (database, more specifically, operational data store, data mart, or data warehouse)

Usually all the three phases execute in parallel since the data extraction takes time, so while the data is being pulled another transformation process executes, processing the already received data and prepares the data for loading and as soon as there is some data ready to be loaded into the target, the data loading kicks off without waiting for the completion of the previous phases. (https://en.wikipedia.org/wiki/Extract,_transform,_load

There is also a design pattern where you to what is referred to as ELT, which is Extract, Load and Transform. In this case the Extract process simply copies source data to a "staging"table, then from the staging table you Transform the data and load it into the final target destination. This can be quite a bit more efficient when dealing with large datasets, even if you are moving the data more than one time.

| Figure 1: Simple schematic for a data warehouse. The ETL process extracts information from the source databases, transforms it and then loads it into the data warehouse. (Photo credit: Wikipedia) |

Transforms the data for storing it in the proper format or structure for the purposes of querying and analysis

Loads it into the final target (database, more specifically, operational data store, data mart, or data warehouse)

Usually all the three phases execute in parallel since the data extraction takes time, so while the data is being pulled another transformation process executes, processing the already received data and prepares the data for loading and as soon as there is some data ready to be loaded into the target, the data loading kicks off without waiting for the completion of the previous phases. (https://en.wikipedia.org/wiki/Extract,_transform,_load

There is also a design pattern where you to what is referred to as ELT, which is Extract, Load and Transform. In this case the Extract process simply copies source data to a "staging"table, then from the staging table you Transform the data and load it into the final target destination. This can be quite a bit more efficient when dealing with large datasets, even if you are moving the data more than one time.

When do you use one versus the other?

The mechanics of each of these processes are incredibly similar. Their use-cases are widely different.

ETL is a process to move data from one repository to another.

Data Munging is taking data from one use-case to another.

If you are doing a regression model, your data needs to reflect a single observation with as many factors as may be appropriate for your regression model for a given observation. While this may mean "Extracting", and "Transforming" data you are not really "Loading" the data anywhere.

You may store an aggregation of the data, like the coefficients, R-squared, or even P-values from your regression model, this is not truly the same thing as an ETL process.

The value of doing machine learning, data science, or statistical modeling on clean, quality data that is stored in a data warehouse, or data mart and leverages the investment the organization makes in using both of these architectural models to make tommorrows data products the most cost effective for the organization.

Data Munging from source data directly without leveraging a data warehouse infrastructure (if it is available) will require the people doing data munging to do some of the same type of work that the ETL team already does.

If there is no data warehouse, data quality, or data management initiative in place, the team doing data munging for data science will be doing some work that could be useful to the wider organization.

They will be creating Data Products that could be useful to other teams. These intermediate Data Products, that may not be their official production Data product should be shared with others.

ETL is a process to move data from one repository to another.

Data Munging is taking data from one use-case to another.

|

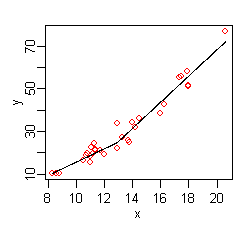

| English: Plot of a simple Friedman's Multivariate Adaptive Splines Regression (MARS) model with one independent variable. (Photo credit: Wikipedia) |

You may store an aggregation of the data, like the coefficients, R-squared, or even P-values from your regression model, this is not truly the same thing as an ETL process.

The value of doing machine learning, data science, or statistical modeling on clean, quality data that is stored in a data warehouse, or data mart and leverages the investment the organization makes in using both of these architectural models to make tommorrows data products the most cost effective for the organization.

Data Munging from source data directly without leveraging a data warehouse infrastructure (if it is available) will require the people doing data munging to do some of the same type of work that the ETL team already does.

If there is no data warehouse, data quality, or data management initiative in place, the team doing data munging for data science will be doing some work that could be useful to the wider organization.

They will be creating Data Products that could be useful to other teams. These intermediate Data Products, that may not be their official production Data product should be shared with others.

Nice post Doug! I've been working with DataMeer on the data munging side of the house, love the tool. One thing I've noticed lately is this:

ReplyDeleteETL / ELT and BI initiatives (including automation and metadata management tools like AnalytixDS Mapping Manager) are focused on the enterprise integration problem.

Data Munging / Data Wrangling tools are highly focused on individual integration problems (not corporate view points).

Thoughts?

Dan

PS: http://wwdvc.com is around the corner, love to see you come back and present this year.

Precisely.

DeleteETL/ELT is for enterprise level data movement. You need something like a data structure graph to keep track of all of the various data movement flows and schedules.

Data Munging (from the way I have used it) is much more about making data that comes from one or more sources fit into a given tool, or algorithm for a use-case that is outside the scope of what Enterprise Integration is doing.

I'll check my schedule for wwdvc. Always good content there!