And now, Big Data...

Big Data has been defined as any data whose processing will not fit into a single machine.

Hadoop and Big Data are often assumed to be the same thing, but many of the database systems I have worked on were clustered as well.

Oracle, MySQL, and SQL Server can all run as clusters.

While Hive is a great tool for leveraging your existing SQL knowledge, restricting yourself to SQL-based access will limit the types of problems you can solve with your data.

Having been a DBA for many years, I clearly see the advantage of simply adding more machines to your cluster, then either rebalancing or just having more space available, with no data migration needed.

Early in my career, if we were running out of space, we had to go through a request process and then schedule downtime for a migration to get the new space allocated to the right database.

Now, all you need to do (with Hadoop anyway) is to add another machine to the cluster.
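As a rough illustration of what "adding another machine" buys you, here is a minimal sketch of the capacity arithmetic. It assumes every block is stored three times (the HDFS default replication factor) and ignores overhead such as reserved space and temporary files. The function name and the disk sizes are made up for the example.

```python
def usable_capacity_tb(node_disk_tb, replication_factor=3):
    """Approximate usable space: raw disk across all nodes divided by
    the number of copies kept of each block.

    Assumption: ignores reserved space, temp files, and uneven disks.
    """
    return sum(node_disk_tb) / replication_factor


# Hypothetical cluster: three data nodes with 12 TB of disk each.
before = usable_capacity_tb([12, 12, 12])

# Add one more 12 TB machine; no migration, just more raw disk.
after = usable_capacity_tb([12, 12, 12, 12])

print(f"usable before: {before} TB, after: {after} TB")
```

The new node only helps existing data once a rebalance spreads blocks onto it, but new writes can use the space right away.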

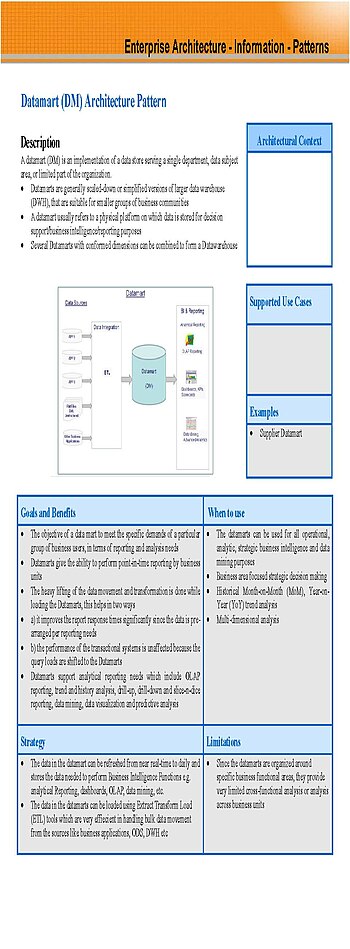

There are some distinct similarities between a Big Data project and a data warehouse.

1) You must have a problem in mind to solve.

2) You must have executive buy-in and sponsorship. Even if this is a research project, you must have the resources to actually build your cluster and then move data onto it.

3) The data must be organized. This will probably not be a normalization scheme like the one we saw previously from Codd. Instead, keep similar data together. (Logs should be organized in folders for logs; RDBMS extracts should be organized in folders named similarly to the original system schemas.)
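The folder organization in point 3 can be sketched as a small naming helper. This is only one possible convention, not a standard: the root `/data/lake`, the `logs` and `rdbms` top-level folders, the date layout, and the function names are all assumptions chosen for illustration.

```python
from datetime import date
from pathlib import PurePosixPath

# Hypothetical root folder of the data lake.
LAKE_ROOT = PurePosixPath("/data/lake")


def log_path(application: str, day: date) -> PurePosixPath:
    """Logs live under a logs folder, split by application and date."""
    return LAKE_ROOT / "logs" / application / day.strftime("%Y/%m/%d")


def extract_path(system: str, schema: str, table: str, day: date) -> PurePosixPath:
    """RDBMS extracts mirror the source system's schema and table names."""
    return (
        LAKE_ROOT / "rdbms" / system / schema / table
        / f"load_date={day.isoformat()}"
    )


print(log_path("webserver", date(2024, 3, 5)))
print(extract_path("crm", "sales", "orders", date(2024, 3, 5)))
```

Building every path through helpers like these keeps the layout consistent, so anyone who knows the source schema can find its extract.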

There is plenty of room for innovation, and for applying best practices from non-Hadoop systems.

But some guidelines should be followed. These guidelines should be consistent with the rest of your data architecture.

Otherwise things will fall into disarray quickly indeed.

One way to organize the movement of data into your data lake, as well as where the summary data goes later, is to use a Data Structure Graph. This will also help you with the rest of your data architecture.
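Here is a minimal sketch of the idea, assuming a Data Structure Graph can be modeled as a directed graph whose nodes are data stores and whose edges are data movements. The store names are hypothetical, and this is just one way to represent it, using the standard library's `graphlib` (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Each data store maps to the set of stores it is loaded from.
# All names are made up for the example.
FEEDS = {
    "lake/logs/webserver": {"webserver"},
    "lake/rdbms/crm": {"crm_db"},
    "lake/summary/daily_sales": {"lake/logs/webserver", "lake/rdbms/crm"},
}


def load_order(feeds):
    """Return the stores in an order where every source comes before
    anything that is loaded from it."""
    return list(TopologicalSorter(feeds).static_order())


def upstream_sources(feeds, target):
    """Return every store that directly or indirectly feeds the target."""
    seen = set()
    stack = [target]
    while stack:
        node = stack.pop()
        for parent in feeds.get(node, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


print(load_order(FEEDS))
print(sorted(upstream_sources(FEEDS, "lake/summary/daily_sales")))
```

Even a small map like this answers two practical questions: in what order loads must run, and which source systems a given summary depends on.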

I could go into a lot of detail about how to set up a Hadoop cluster, but things evolve quickly in that space, and I am sure anything I write would soon be outdated.

I encourage you to look for a practice VM to work with, such as the Cloudera QuickStart VM.

Practice, try new things, then move them to production. If you need help, reach out. There are many sources of good advice for setting up your Hadoop environment.
