A 4-node graph for illustrating concepts in transportation geography and network science. (Photo credit: Wikipedia)

Recently, at the Data Modeling Zone conference, I was asked how to identify a problem as something that should be solved with graph tools.

The difficulty, I think, for data modelers is that many of us with a relational background tend to think about the relationships between our data structures.

This table is related to that other table through a one-to-one, one-to-many, many-to-one, or even many-to-many relationship.

There are whole books devoted to discussing how to create relational data models that support these relationships.

I wrote a little about graph fundamentals here: http://bit.ly/GraphFundamentals, but applying these atomic definitions to a real-world problem can be a bit of a stretch.

There are, however, a few words to key in on.

Path:

What is the path that a customer takes through our store?

Precedence graph, based on Image:Directed.svg (Photo credit: Wikipedia)

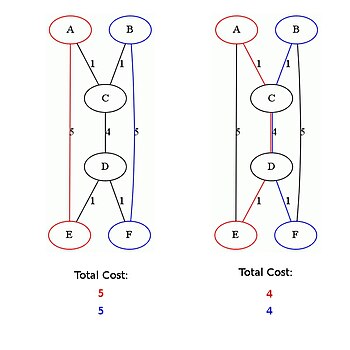

A path is about more than just the relationship between two things. It is about the relationships among many things, and how something (like a customer, or some data) flows through the graph.

Learning the optimal path through a set of obstacles would require some iterative path analysis work.

These types of path questions are also common in the human resources domain, from the perspective of career paths.
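To make the idea of a path concrete, here is a minimal sketch in Python. The store layout, the section names, and the `shortest_path` helper are all assumptions made up for illustration; a real analysis would start from observed customer movement data. It uses a plain breadth-first search to find the route with the fewest steps.

```python
from collections import deque

# Hypothetical store layout: each section lists the sections
# a customer can walk to next. These names are invented.
STORE = {
    "entrance": ["produce", "bakery"],
    "produce": ["dairy"],
    "bakery": ["dairy", "checkout"],
    "dairy": ["checkout"],
    "checkout": [],
}


def shortest_path(graph, start, goal):
    """Breadth-first search: return the path with the fewest steps, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None  # no route exists between the two sections


print(shortest_path(STORE, "entrance", "checkout"))
# ['entrance', 'bakery', 'checkout']
```

Fewest steps is only one possible definition of "optimal"; weighting each step by time or distance would call for a different search.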

A segment of a social network (Photo credit: Wikipedia)

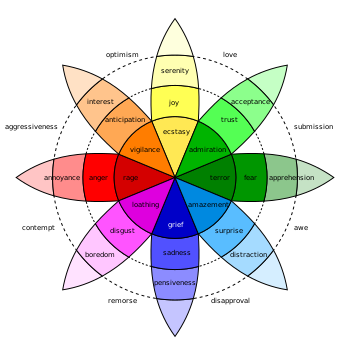

People:

Social network analysis is one of the practical applications of graph theory.

Milgram's experiments are key touch-points, commonly mentioned when trying to understand the degrees of separation between two items. How often do people speak to one another? Does Ann regularly talk to Bob, and then to Charlie?

If Ann says something positive about your brand, will Bob and Charlie both like your product?

If Ann says something negative, will your stock price go down?

Who is talking about your products and who is listening to them?
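Degrees of separation can be computed directly once conversations are stored as a graph. The sketch below is illustrative only: the list of conversations (including the extra names Dana and Eve) and both helper functions are assumptions, and conversations are treated as two-way links.

```python
from collections import deque

# Hypothetical "talks to" pairs, treated as two-way conversations.
CONVERSATIONS = [
    ("Ann", "Bob"),
    ("Bob", "Charlie"),
    ("Charlie", "Dana"),
    ("Ann", "Eve"),
]


def build_network(pairs):
    """Turn a list of pairs into an undirected adjacency mapping."""
    network = {}
    for a, b in pairs:
        network.setdefault(a, set()).add(b)
        network.setdefault(b, set()).add(a)
    return network


def degrees_of_separation(network, person):
    """Return {other person: number of hops from person} via breadth-first search."""
    distance = {person: 0}
    queue = deque([person])
    while queue:
        current = queue.popleft()
        for contact in network.get(current, ()):
            if contact not in distance:
                distance[contact] = distance[current] + 1
                queue.append(contact)
    return distance


degrees = degrees_of_separation(build_network(CONVERSATIONS), "Ann")
print(degrees["Bob"], degrees["Charlie"], degrees["Dana"])
# 1 2 3
```

In this made-up network, anything Ann says reaches Bob first hand, Charlie second hand, and Dana third hand.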

Example of the Shared Shortest Path Problem (Photo credit: Wikipedia)

Data Itself:

I have written previously about how data movement, and data structures themselves, can be thought of as a graph: http://bit.ly/DataStructureGraph

Related terms in this context are data lineage and data pipeline.

How does data flow through your organization?

How does it flow into your organization?

How does it flow out of your organization?

Once in your organization, how many systems does the same data flow into and out of without enrichment?

Does this data really need to go into those systems?
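Questions like these become simple queries once the flows are recorded as a graph. The following is a rough sketch under stated assumptions: the system names, the `ENRICHES` flags, and the `pass_through_systems` helper are all hypothetical, and "enrichment" is reduced to a yes/no flag per system.

```python
# Hypothetical data flows between systems: (source, target).
FLOWS = [
    ("crm", "staging"),
    ("staging", "warehouse"),
    ("warehouse", "reporting"),
    ("warehouse", "archive"),
]

# Hypothetical flag: does the system add anything to the data it handles?
ENRICHES = {
    "crm": True,
    "staging": False,
    "warehouse": True,
    "reporting": False,
    "archive": False,
}


def pass_through_systems(flows, enriches):
    """Systems that both receive and forward data without enriching it."""
    receives = {target for _, target in flows}
    sends = {source for source, _ in flows}
    return sorted(s for s in receives & sends if not enriches.get(s, False))


print(pass_through_systems(FLOWS, ENRICHES))
# ['staging']
```

A system that shows up in this list is a candidate for the question above: does the data really need to go through it?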

Movement:

How does a thing that your company interacts with (a package, product, person, or participant) move? Rarely does it move from only one place to another.

Each step the thing takes from one place to another is part of a path, as described above.

You may think that a product moving from a shelf, to a box, then on to a truck for delivery to a customer can all be handled by individual applications. This is entirely possible. The value of doing graph analysis is new insight into existing data.
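As one illustration of getting new insight from existing data, movement records that individual applications already capture can be folded into a single graph of transitions. This is only a sketch: the scan events, the item ids, and the `transition_counts` helper are invented, and the events are assumed to be in time order for each item.

```python
from collections import Counter

# Hypothetical scan events (item id, location), in time order per item.
EVENTS = [
    ("pkg1", "shelf"), ("pkg1", "box"), ("pkg1", "truck"), ("pkg1", "customer"),
    ("pkg2", "shelf"), ("pkg2", "box"), ("pkg2", "shelf"),
    ("pkg2", "box"), ("pkg2", "truck"),
]


def transition_counts(events):
    """Count how often items move from one location directly to another."""
    last_seen = {}
    counts = Counter()
    for item, location in events:
        if item in last_seen:
            counts[(last_seen[item], location)] += 1
        last_seen[item] = location
    return counts


counts = transition_counts(EVENTS)
print(counts[("shelf", "box")], counts[("box", "shelf")])
# 3 1
```

Each counted pair is a weighted edge in a movement graph. Here the unexpected box-to-shelf edge shows an item that was packed and then put back, something no single application would report on its own.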

I would never suggest that Graph Analysis or Network Science is the only way to look at a problem.

I would suggest that these tools can provide new or unique insight where businesses are trying to solve problems related to paths, people, data, or movement.

After all, Data Science applies a fresh perspective to our existing world.

We should all be trying to achieve more with our data.